Test Workflows - Services

This Test Workflows functionality is not available when running the Testkube Agent in Standalone Mode.

If your use case is more complex, you may need additional services for the tests you are running. Common use cases are:

- Databases, e.g. MongoDB or PostgreSQL

- Workers, e.g. remote JMeter workers or Selenium Grid's remote browsers

- The service under test, e.g. your API, to run E2E tests against it

Testkube allows you to run such services for the Test Workflow, communicate with them, and debug them smoothly.

If you are using TestContainers to manage dependencies for your tests, check out this example for how to use TestContainers from your TestWorkflows.

How it works

When you define a service, the Test Workflow creates a new pod (and any other required resources) for each of its instances, reads their status and logs, and provides their information (like the IP) for use in further steps. After the service is no longer needed, it is cleaned up.

As the services are started in a separate pod, they don't share the file system with the Test Workflow execution. There are multiple ways to share data with them: either one of the techniques described below, or advanced Kubernetes-native mechanisms like ReadWriteMany volumes.

Syntax

To add services, specify the `services` clause.

It can be placed either directly at the spec level (to be available for the whole execution) or on a specific step (to isolate it); see the Schema Reference.

You may want to define services in a Test Workflow Template to reuse them across multiple tests.
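For instance, the MongoDB service from the example below could be kept in a template and reused. This sketch makes several assumptions. The template name `mongo-service` and the workflow name are hypothetical. The template is pulled in with `spec.use`, the same clause the JMeter example on this page uses for `distribute/evenly`. Verify the exact template schema in the Schema Reference before relying on it.

```yaml
# Hypothetical template holding a reusable MongoDB service
kind: TestWorkflowTemplate
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: mongo-service
spec:
  services:
    db:
      timeout: 5m
      image: mongo:latest
      env:
      - name: MONGO_INITDB_ROOT_USERNAME
        value: root
      - name: MONGO_INITDB_ROOT_PASSWORD
        value: p4ssw0rd
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
---
# Hypothetical workflow that includes the template above
kind: TestWorkflow
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: example-workflow-using-mongo-template
spec:
  use:
  - name: mongo-service
  steps:
  - name: Check if it is running
    run:
      image: mongo:latest
      shell: mongosh -u root -p p4ssw0rd {{ services.db.0.ip }} --eval 'db.serverStatus().localTime'
```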

- YAML

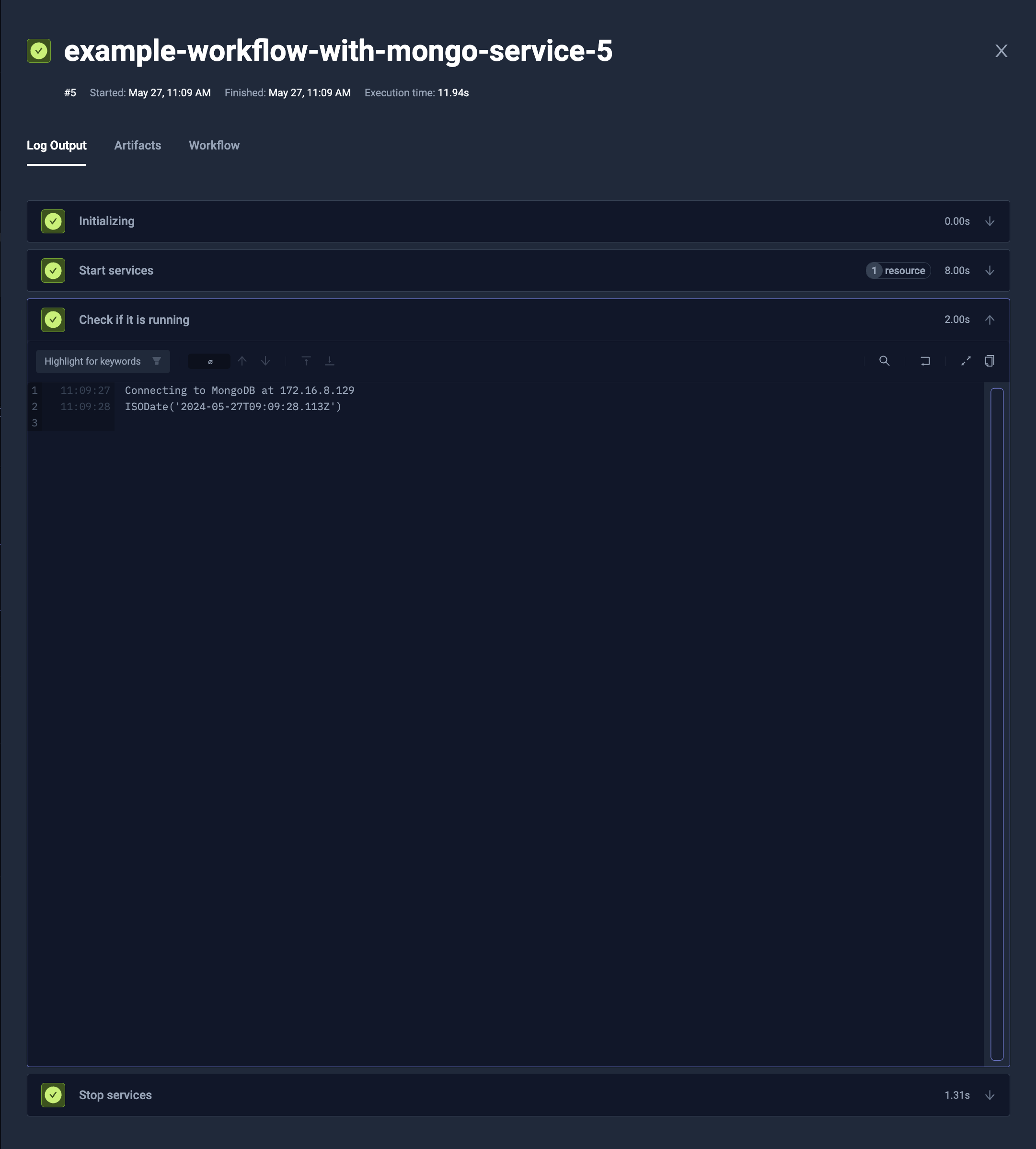

- Log Output

```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: example-workflow-with-mongo-service
spec:
  services:
    db:
      timeout: 5m
      image: mongo:latest
      env:
      - name: MONGO_INITDB_ROOT_USERNAME
        value: root
      - name: MONGO_INITDB_ROOT_PASSWORD
        value: p4ssw0rd
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
  steps:
  - name: Check if it is running
    run:
      image: mongo:latest
      shell: |
        echo Connecting to MongoDB at {{ services.db.0.ip }}
        mongosh -u root -p p4ssw0rd {{ services.db.0.ip }} --eval 'db.serverStatus().localTime'
```

Connecting to the services

To connect to the created services, use the `services.<SVC_NAME>.<INDEX>.ip` expression wherever you need the address (e.g. in an environment variable or a shell command):

- `services.db.0.ip` will return `string`: the IP of the 1st instance of the `db` service
- `services.db.*.ip` will return `[]string`: a list of IPs of all the `db` service instances
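Here is a minimal sketch of both expressions. It assumes a hypothetical `cache` service running three Redis instances from the `redis:7` image, and shows only the `spec` part of the Test Workflow. When a list such as `services.cache.*.ip` is rendered as text, the IPs are joined with commas, as described in the JMeter example further down.

```yaml
# Fragment of a Test Workflow spec; the "cache" service is hypothetical
spec:
  services:
    cache:
      count: 3
      image: redis:7
      readinessProbe:
        tcpSocket:
          port: 6379
        periodSeconds: 1
  steps:
  - name: Print the service addresses
    shell: |
      echo "First instance: {{ services.cache.0.ip }}"
      echo "All instances: {{ services.cache.*.ip }}"
  - name: Pass the address via an environment variable
    run:
      image: redis:7
      env:
      - name: REDIS_HOST
        value: '{{ services.cache.0.ip }}'
      shell: redis-cli -h "$REDIS_HOST" ping
```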

Basic configuration

A service accepts fields similar to the `run` step, e.g.:

- `image`, `env`, `volumeMounts`, `resources`: to configure the container
- `command`, `args`: to specify the command to run
- `shell`: to specify a script to run (instead of `command`/`args`)
- `description`: to provide human-readable information for each instance separately
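The fragment below sketches these fields together. It starts a hypothetical `api` service from the `python:3.12-alpine` image and uses `shell` instead of `command`/`args`. It also sets a `description` and resource requests, then waits for the `/health` file it creates. The service name, image, and port are illustrative assumptions.

```yaml
# Fragment of a Test Workflow spec; the "api" service is hypothetical
spec:
  services:
    api:
      description: "Static file server"
      image: python:3.12-alpine
      # Script used instead of command/args: create a health file and serve /srv
      shell: |
        mkdir -p /srv && echo ok > /srv/health
        python -m http.server 8080 --directory /srv
      resources:
        requests:
          cpu: 100m
          memory: 64Mi
      readinessProbe:
        httpGet:
          path: /health
          port: 8080
        periodSeconds: 1
  steps:
  - shell: wget -q -O - {{ services.api.0.ip }}:8080/health
```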

Fetching logs

By default, the logs of the services are not saved. If you would like to fetch them, use the `logs` property.

It takes an expression condition, so you can dynamically choose whether the logs should be saved or not. Common values are:

- `logs: always` to always store the logs
- `logs: failed` to store the logs only if the Test Workflow has failed
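Here is a minimal sketch of both options. It assumes two hypothetical services: `db` keeps its logs only when the execution fails, while `proxy` always keeps them.

```yaml
# Fragment of a Test Workflow spec; service names are hypothetical
spec:
  services:
    db:
      image: mongo:latest
      # Store the logs only if the Test Workflow has failed
      logs: failed
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
    proxy:
      image: nginx:1.25.4
      # Always store the logs
      logs: always
      readinessProbe:
        httpGet:
          path: /
          port: 80
        periodSeconds: 1
  steps:
  - shell: wget -q -O - {{ services.proxy.0.ip }}
```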

Pod configuration

The service is started as a separate pod, so you can configure its pod with the `pod` property, the same way as in the Test Workflow's configuration.

```yaml
kind: TestWorkflow
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: example-workflow-with-mongo-service
spec:
  services:
    db:
      pod:
        tolerations:
        - key: pool
          operator: Equal
          value: system
          effect: NoSchedule
      timeout: 6m
      image: mongo:latest
      env:
      - name: MONGO_INITDB_ROOT_USERNAME
        value: root
      - name: MONGO_INITDB_ROOT_PASSWORD
        value: p4ssw0rd
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
  steps:
  - name: Check if it is running
    run:
      image: mongo:latest
      shell: |
        echo Connecting to MongoDB at {{services.db.0.ip}}
        mongosh -u root -p p4ssw0rd {{services.db.0.ip}} --eval 'db.serverStatus().localTime'
```

Lifecycle

You can apply a `readinessProbe` to ensure that the service is available for the next step.

The Test Workflow then won't continue until the container is ready. To ensure that the execution won't get stuck, you can add a `timeout` property (like `timeout: 1h30m20s`), so that it fails if the service is not ready after that time.

The timeout duration encompasses all stages from the creation of the Kubernetes pod to executing the test request against the service. This includes scheduling the pod on a node, pulling the required image, waiting for the pod to become ready, and ultimately sending the test request. When starting your service for the first time, consider the time needed to pull the Docker image, which depends on its size. Additionally, if the necessary Kubernetes resources are unavailable and a new node needs to be spun up, this will further increase the startup time. Be sure to account for these factors when setting the timeout value.
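As a rough illustration, consider a service with a large image on a cluster that may need to add a node; it could use a more generous `timeout`. The `10m` value and `initialDelaySeconds` below are assumptions to tune for your cluster, not recommended defaults. The sketch also assumes that `readinessProbe` accepts the standard Kubernetes probe fields, as its use of `periodSeconds` in the examples suggests.

```yaml
# Fragment of a Test Workflow spec; values are illustrative
spec:
  services:
    db:
      image: mongo:latest
      # Budget covering pod scheduling, a possible new node,
      # the image pull, and MongoDB startup
      timeout: 10m
      readinessProbe:
        tcpSocket:
          port: 27017
        # Assumed to behave like the standard Kubernetes probe field
        initialDelaySeconds: 5
        periodSeconds: 1
  steps:
  - name: Check if it is running
    run:
      image: mongo:latest
      shell: mongosh {{ services.db.0.ip }} --eval 'db.serverStatus().localTime'
```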

Matrix and sharding

Services support matrix and sharding, so you can run multiple replicas and/or distribute the load across multiple instances.

This uses the regular matrix/sharding properties (`matrix`, `shards`, `count` and `maxCount`).

You can read more about it in the general Matrix and Sharding documentation.
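Here is a minimal sketch of a matrix service. It assumes you want to test against two MongoDB versions; the `version` values are illustrative. Each matrix combination becomes a separate instance, and the step loops over the comma-joined text form of `services.db.*.ip`.

```yaml
# Fragment of a Test Workflow spec; versions are illustrative
spec:
  services:
    db:
      matrix:
        version: ['6.0', '7.0']
      image: 'mongo:{{ matrix.version }}'
      description: 'MongoDB {{ matrix.version }}'
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
  steps:
  - name: Check every instance
    run:
      image: mongo:7.0
      # services.db.*.ip is rendered as comma-separated IPs; split it for the loop
      shell: |
        for ip in $(echo "{{ services.db.*.ip }}" | tr ',' ' '); do
          mongosh "$ip" --quiet --eval 'db.version()'
        done
```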

Step-level Services and dependencies

Services can also be defined on the step level, making them available only within the corresponding step. This enables:

- Service isolation across steps

- Service dependencies, for example, if your step-level service(s) need to connect to a Workflow-level service.

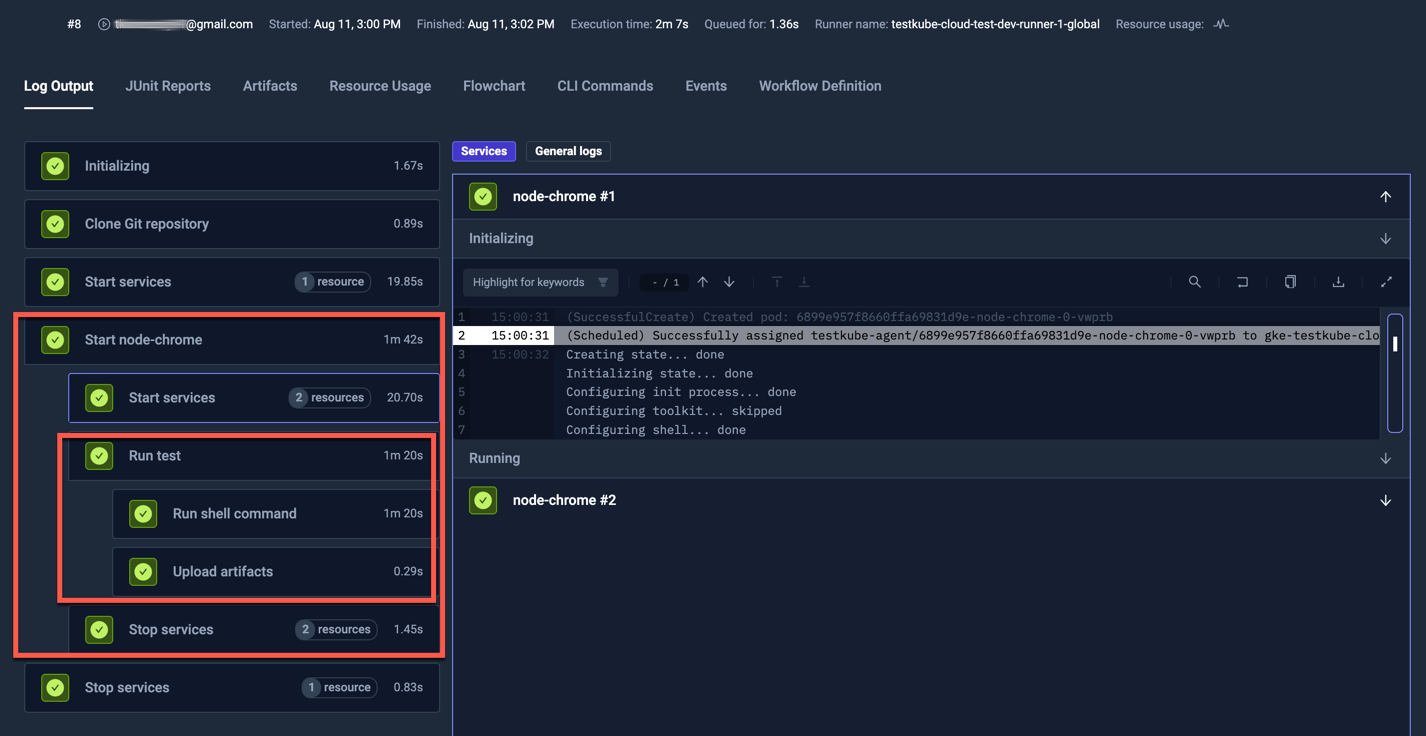

The following example shows a Workflow that starts a Selenium Hub service at the Workflow level, then starts two Selenium Chrome nodes as step-level services. The nodes connect to the hub using `{{ services.hub.0.ip }}` as its IP address, and nested steps run the actual test against these nodes.

```yaml
kind: TestWorkflow
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: selenium-java-hub-and-nodes
description: "Selenium Java + Hub + Chrome Nodes"
spec:
  config:
    chromeNodes: {type: integer, default: 2}
    junitParallelism: {type: integer, default: 4}
  content:
    git:
      uri: https://github.com/kubeshop/testkube
      revision: main
      paths:
      - test/selenium/selenium-java
  container:
    image: maven:3.9.11-eclipse-temurin-17
    workingDir: /data/repo/test/selenium/selenium-java
    resources:
      requests:
        cpu: 1
        memory: 512Mi
  services:
    hub:
      image: selenium/hub:4.34.0-20250727
      timeout: 120s
      logs: always
      resources:
        requests:
          cpu: 300m
          memory: 256Mi
      readinessProbe:
        httpGet:
          path: /status
          port: 4444
        periodSeconds: 1
  steps:
  - name: Start node-chrome
    services:
      node-chrome:
        image: selenium/node-chrome:112.0-chromedriver-112.0-grid-4.34.0-20250727
        timeout: 120s
        logs: always
        count: config.chromeNodes
        resources:
          requests:
            cpu: 500m
            memory: 512Mi
        readinessProbe:
          httpGet:
            path: /status
            port: 5555
          periodSeconds: 1
        env:
        - name: SE_EVENT_BUS_HOST
          value: '{{ services.hub.0.ip }}'
        - name: SE_EVENT_BUS_PUBLISH_PORT
          value: "4442"
        - name: SE_EVENT_BUS_SUBSCRIBE_PORT
          value: "4443"
    steps:
    - name: Run test
      run:
        env:
        - name: REMOTE_WEBDRIVER_URL
          value: 'http://{{ services.hub.0.ip }}:4444/wd/hub'
        - name: BROWSER
          value: 'chrome'
        - name: JUNIT_JUPITER_EXECUTION_PARALLEL_ENABLED
          value: "true"
        - name: JUNIT_JUPITER_EXECUTION_PARALLEL_MODE_DEFAULT
          value: concurrent
        - name: JUNIT_JUPITER_EXECUTION_PARALLEL_MODE_CLASSES_DEFAULT
          value: concurrent
        - name: JUNIT_JUPITER_EXECUTION_PARALLEL_CONFIG_STRATEGY
          value: fixed
        - name: JUNIT_JUPITER_EXECUTION_PARALLEL_CONFIG_FIXED_PARALLELISM
          value: "{{ config.junitParallelism }}"
        shell: |
          mvn test
          mvn surefire-report:report
      artifacts:
        paths:
        - target/site/**/*
        - target/surefire-reports/**/*
```

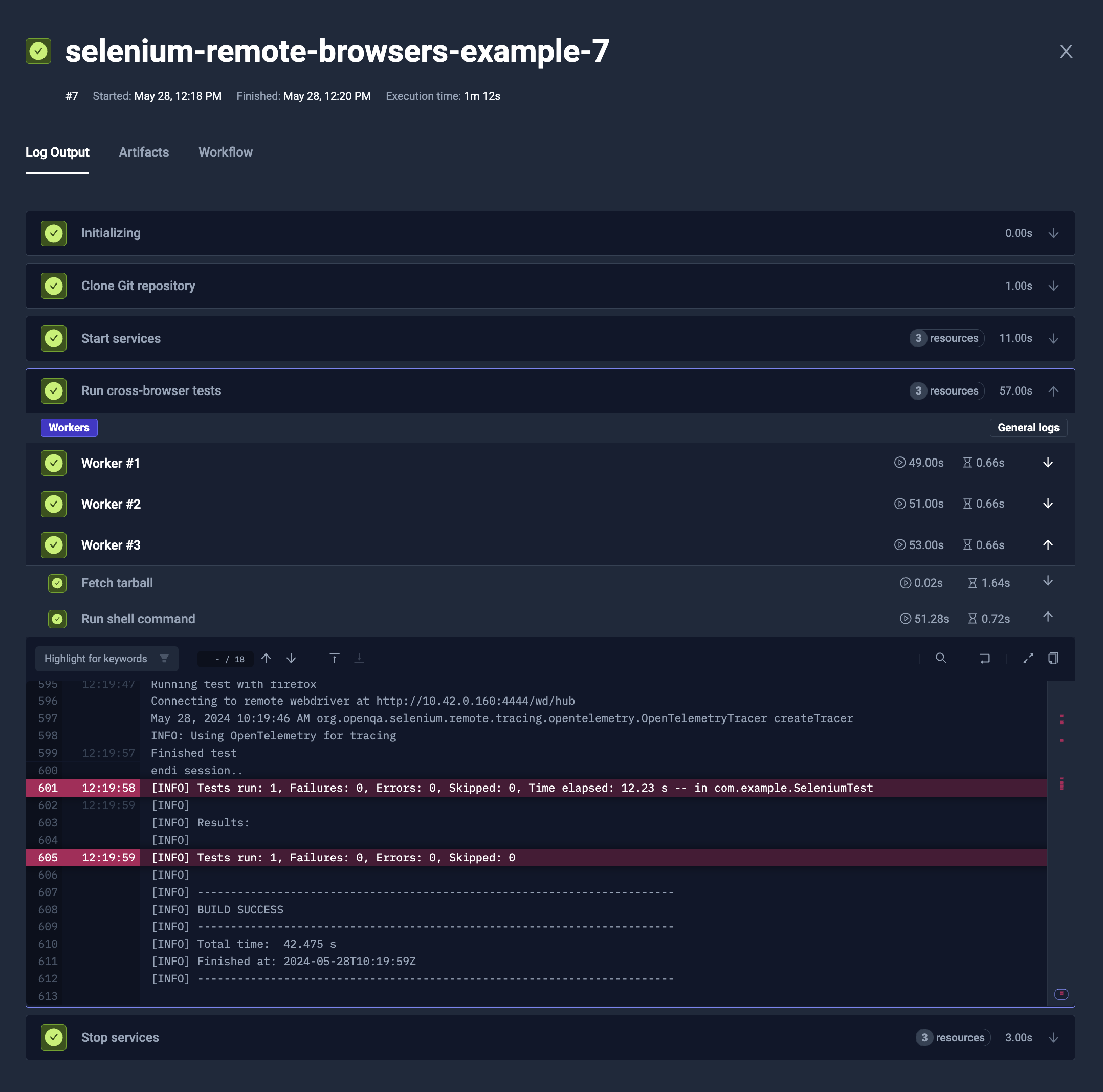

The output of this execution looks as follows:

Providing content

There are multiple ways to provide files to the services.

As the services are started in a separate pod, they don't share the file system with the Test Workflow execution.

Copying content inside

It is possible to copy files from the original Test Workflow into the services. For example, you may want to fetch the repository and install the dependencies in the original Test Workflow, and then distribute them to the services.

To do so, use the `transfer` property. It takes a list of files to transfer:

- `{ from: "/data/repo/build" }` will copy the `/data/repo/build` directory from the execution's pod into `/data/repo/build` in the service's pod
- `{ from: "/data/repo/build", to: "/out" }` will copy the `/data/repo/build` directory from the execution's pod into `/out` in the service's pod
- `{ from: "/data/repo/build", to: "/out", "files": ["**/*.json"] }` will copy only the JSON files from the `/data/repo/build` directory in the execution's pod into `/out` in the service's pod
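The following sketches the `files` filter. It assumes that `/data/repo/build` was produced by an earlier step, as in the example below, and that the patterns are relative to `from`, as the `**/*.json` case above implies. The chosen patterns are illustrative; `index.html` is copied so that the `/` readiness check can succeed.

```yaml
# Fragment of spec.steps; only the step that uses the service is shown
- name: Test the application
  services:
    server:
      timeout: 1m
      image: nginx:1.25.4
      transfer:
      # Copy only index.html plus CSS/JS assets into the nginx web root
      - from: /data/repo/build
        to: /usr/share/nginx/html
        files:
        - index.html
        - '**/*.css'
        - '**/*.js'
      readinessProbe:
        httpGet:
          path: /
          port: 80
        periodSeconds: 1
  steps:
  - shell: wget -q -O - {{ services.server.0.ip }}
```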

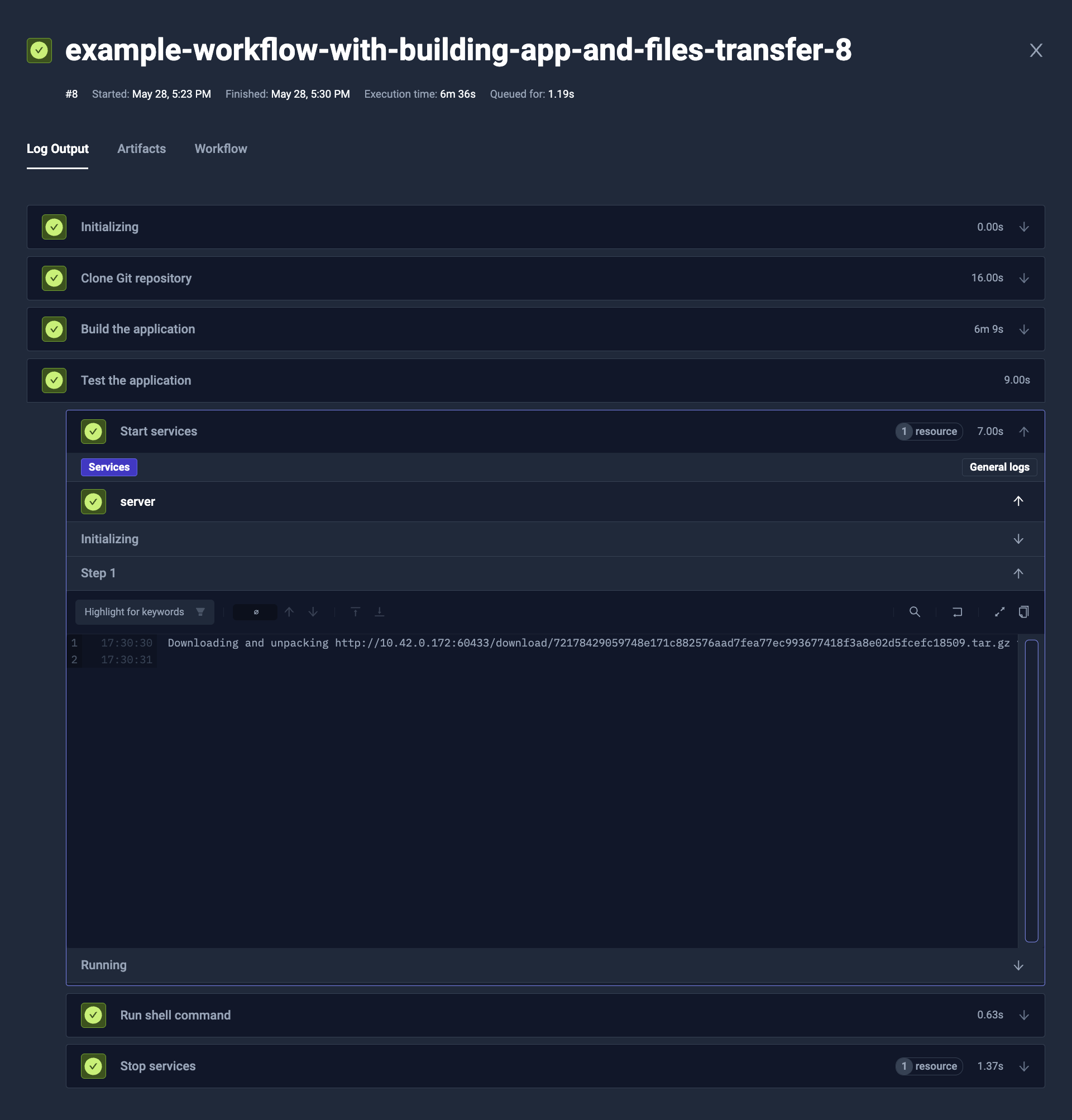

Example


```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: example-workflow-with-building-app-and-files-transfer
spec:
  content:
    git:
      uri: https://github.com/kubeshop/testkube-docs.git
      revision: main
  container:
    workingDir: /data/repo
    resources:
      requests:
        cpu: 1
        memory: 2Gi
  steps:
  - name: Build the application
    run:
      image: node:21
      shell: npm i && npm run build
  - name: Test the application
    services:
      server:
        timeout: 1m
        transfer:
        - from: /data/repo/build
          to: /usr/share/nginx/html
        image: nginx:1.25.4
        logs: always
        readinessProbe:
          httpGet:
            path: /
            port: 80
          periodSeconds: 1
    steps:
    - shell: wget -q -O - {{ services.server.0.ip }}
```

Static content or a Git repository

Services accept a `content` property, similar to the one directly in the Test Workflow. For example, you may provide static configuration files to the service:

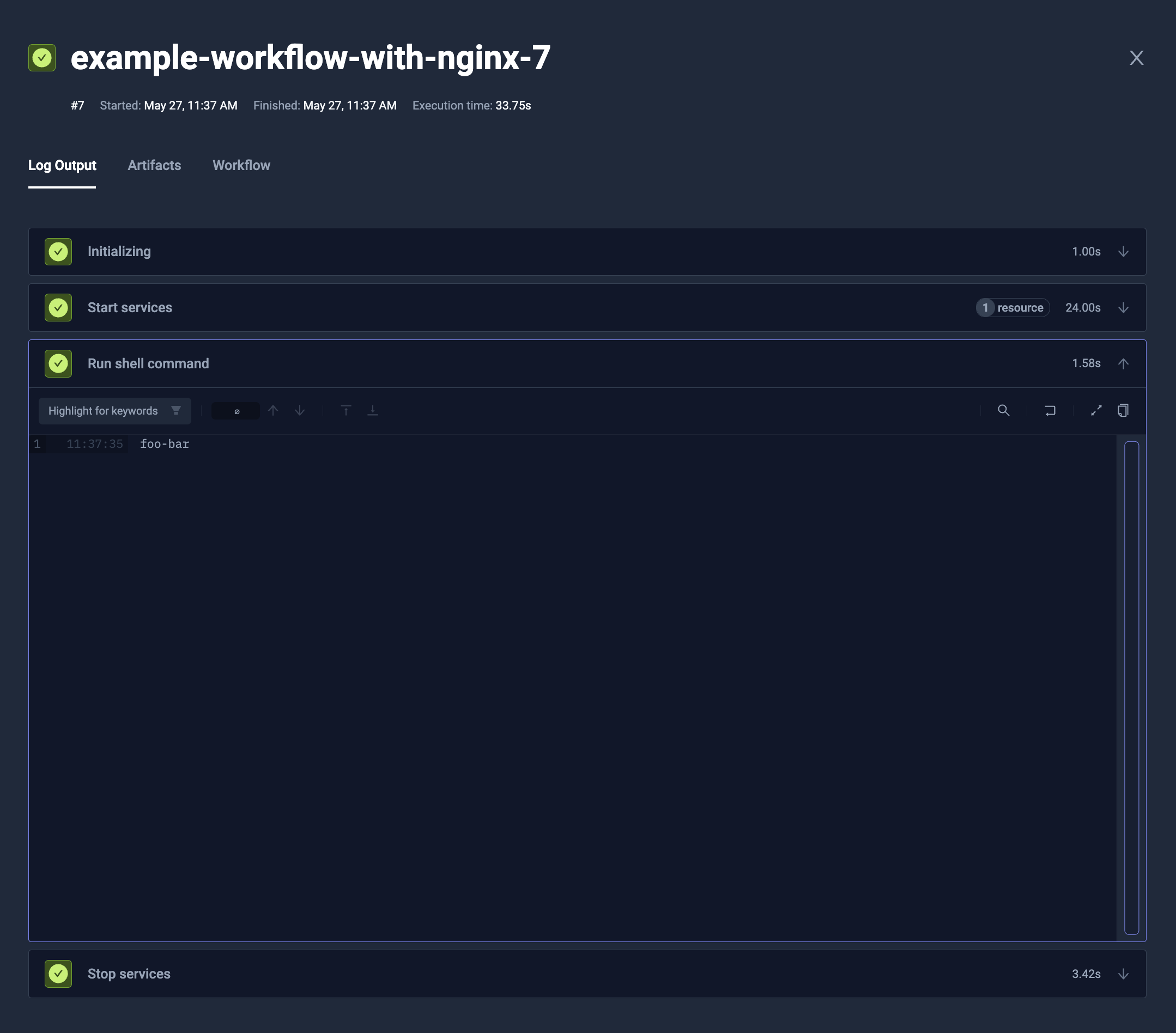


```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: example-workflow-with-nginx
spec:
  services:
    http:
      timeout: 5m
      content:
        files:
        - path: /etc/nginx/nginx.conf
          content: |
            events { worker_connections 1024; }
            http {
              server {
                listen 8888;
                location / { root /www; }
              }
            }
        - path: /www/index.html
          content: "foo-bar"
      image: nginx:1.25.4
      readinessProbe:
        httpGet:
          path: /
          port: 8888
        periodSeconds: 1
  steps:
  - shell: wget -q -O - {{ services.http.0.ip }}:8888
```
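For the Git case, the service's `content` should accept a `git` source, as in the Test Workflow itself. The sketch below serves a single file from the Testkube repository with nginx. It assumes that, as in the main execution, the repository is cloned into `/data/repo` inside the service's pod. The service name `jmx` and port `8888` are hypothetical.

```yaml
# Fragment of a Test Workflow spec; the "jmx" service is hypothetical
spec:
  services:
    jmx:
      timeout: 5m
      content:
        # Assumption: the repository is cloned into /data/repo in the service's pod
        git:
          uri: https://github.com/kubeshop/testkube
          revision: main
          paths:
          - test/jmeter/jmeter-executor-smoke.jmx
        files:
        - path: /etc/nginx/nginx.conf
          content: |
            events { worker_connections 1024; }
            http {
              server {
                listen 8888;
                location / { root /data/repo/test/jmeter; }
              }
            }
      image: nginx:1.25.4
      readinessProbe:
        # No index.html is served, so check the TCP port instead of GET /
        tcpSocket:
          port: 8888
        periodSeconds: 1
  steps:
  - shell: wget -q -O - {{ services.jmx.0.ip }}:8888/jmeter-executor-smoke.jmx
```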

Examples

JMeter with distributed Remote Workers

You can easily run JMeter with distributed remote workers, which can even be spread evenly across all the Kubernetes nodes.

The example below:

- Reads the JMX configuration from the Git repository (`spec.content.git`)
- Starts 5 remote workers (`spec.services.slave.count`)
  - Distributes them evenly across nodes (`spec.services.slave.use[0]`: the `distribute/evenly` template sets common `pod.topologySpreadConstraints`)
  - Reserves 1/8 CPU and 128MB memory for each instance (`spec.services.slave.container.resources`)
  - Waits until they accept connections on port 1099 (`spec.services.slave.readinessProbe`)
- Runs the JMeter controller against all the remote workers (`spec.steps[0].run`)
  - It uses `{{ services.slave.*.ip }}` as an argument: `services.slave.*.ip` returns a list of IPs, which are joined with commas (`,`) when converted to text

```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: distributed-jmeter-example
spec:
  content:
    git:
      uri: https://github.com/kubeshop/testkube
      revision: main
      paths:
      - test/jmeter/jmeter-executor-smoke.jmx
  container:
    workingDir: /data/repo/test/jmeter
  services:
    slave:
      use:
      - name: distribute/evenly
      count: 5
      timeout: 30s
      image: alpine/jmeter:5.6
      command:
      - jmeter-server
      - -Dserver.rmi.localport=60000
      - -Dserver_port=1099
      - -Jserver.rmi.ssl.disable=true
      container:
        resources:
          requests:
            cpu: 128m
            memory: 128Mi
      readinessProbe:
        tcpSocket:
          port: 1099
        periodSeconds: 1
  steps:
  - name: Run tests
    run:
      image: alpine/jmeter:5.6
      shell: |
        jmeter -n \
          -X -Jserver.rmi.ssl.disable=true -Jclient.rmi.localport=7000 \
          -R {{ services.slave.*.ip }} \
          -t jmeter-executor-smoke.jmx
```

Selenium tests with multiple remote browsers

You can initialize multiple remote browsers, and then run tests against them in parallel.

The example below:

- Clones the test code (`content`)
- Starts 3 instances of the `remote` service (`services.remote`)
  - Each instance uses a different browser (`image` of `services.remote.matrix.browser` passed to `services.remote.image`)
  - Each instance exposes the driver name in its description (`driver` of `services.remote.matrix.browser` passed to `services.remote.description`)
  - Waits until the browser is ready to connect (`services.remote.readinessProbe`)
  - Always saves the browser logs (`services.remote.logs`)
- Runs tests in parallel for each browser (`steps[0].parallel`)
  - Runs for each `remote` service instance (`steps[0].parallel.matrix.browser`)
  - Transfers the code from the repository to the parallel step (`steps[0].parallel.transfer`)
  - Sets the environment variables based on the service instance's description and IP (`steps[0].parallel.container.env`)
  - Runs the tests (`steps[0].parallel.shell`)


```yaml
kind: TestWorkflow
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: selenium-remote-browsers-example
spec:
  content:
    git:
      uri: https://github.com/cerebro1/selenium-testkube.git
      paths:
      - selenium-java
  services:
    remote:
      matrix:
        browser:
        - driver: chrome
          image: selenium/standalone-chrome:4.21.0-20240517
        - driver: edge
          image: selenium/standalone-edge:4.21.0-20240517
        - driver: firefox
          image: selenium/standalone-firefox:4.21.0-20240517
      logs: always
      image: "{{ matrix.browser.image }}"
      description: "{{ matrix.browser.driver }}"
      readinessProbe:
        httpGet:
          path: /wd/hub/status
          port: 4444
        periodSeconds: 1
  steps:
  - name: Run cross-browser tests
    parallel:
      matrix:
        browser: 'services.remote'
      transfer:
      - from: /data/repo/selenium-java
      container:
        workingDir: /data/repo/selenium-java
        image: maven:3.9.6-eclipse-temurin-22-alpine
        env:
        - name: SELENIUM_BROWSER
          value: '{{ matrix.browser.description }}'
        - name: SELENIUM_HOST
          value: '{{ matrix.browser.ip }}:4444'
      shell: mvn test
```

Run database for integration tests

To test an application, you often want to check that it also works well with external components. For example, unit tests won't catch a syntax error in an SQL query or deadlocks in the process unless you run them against an actual database.

The example below:

- Starts a single MongoDB instance as the `db` service (`services.db`)
  - Configures the initial credentials to `root`/`p4ssw0rd` (`services.db.env`)
  - Waits until MongoDB accepts connections (`services.db.readinessProbe`)
- Runs integration tests (`steps[0].run`)
  - Configures the `API_MONGO_DSN` environment variable to point to MongoDB (`steps[0].run.env[0]`)
  - Installs local dependencies and runs the tests (`steps[0].run.shell`)

```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: database-service-example
spec:
  content:
    git:
      uri: https://github.com/kubeshop/testkube.git
      revision: develop
  services:
    db:
      image: mongo:latest
      env:
      - name: MONGO_INITDB_ROOT_USERNAME
        value: root
      - name: MONGO_INITDB_ROOT_PASSWORD
        value: p4ssw0rd
      readinessProbe:
        tcpSocket:
          port: 27017
        periodSeconds: 1
  container:
    workingDir: /data/repo
  steps:
  - name: Run integration tests
    run:
      image: golang:1.22.3-bookworm
      env:
      - name: API_MONGO_DSN
        value: mongodb://root:p4ssw0rd@{{services.db.0.ip}}:27017
      shell: |
        apt-get update
        apt-get install -y ca-certificates libssl3 git skopeo
        go install gotest.tools/gotestsum@v1.9.0
        INTEGRATION=y gotestsum --format short-verbose -- -count 1 -run _Integration -cover ./pkg/repository/...
```
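The same pattern applies to PostgreSQL, the other database mentioned at the top of this page. This minimal sketch assumes the official `postgres:16` image, which creates a database named after `POSTGRES_USER` by default. The workflow name and credentials are illustrative.

```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: postgres-service-example  # hypothetical name
spec:
  services:
    db:
      image: postgres:16
      env:
      - name: POSTGRES_USER
        value: root
      - name: POSTGRES_PASSWORD
        value: p4ssw0rd
      readinessProbe:
        tcpSocket:
          port: 5432
        periodSeconds: 1
  steps:
  - name: Check the connection
    run:
      image: postgres:16
      env:
      # psql reads the password from PGPASSWORD
      - name: PGPASSWORD
        value: p4ssw0rd
      shell: psql -h {{ services.db.0.ip }} -U root -d root -c 'SELECT version();'
```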